On Oct 18th, 2025, I was invited by Sigit Dewanto to speak (again) at the regular Python Jogja community event. This is the third time I have been invited as a speaker. This time, the theme was leveraging AI in daily work, and the event was held at the Faculty of Science and Technology at UIN SUKA Yogyakarta. I proposed to talk about Context Engineering, something I have experimented with a lot over the last couple of months.

In my opinion, AI has changed how we do our work, especially for programmers. From code completion to code documentation, much of it can now be done with an AI assistant. I know not everyone agrees with working with AI, but at least we can admit it makes our lives a little bit easier than before.

In my talk, I introduced the Context Engineering concept and showed how the audience could incorporate the ideas into their own projects.

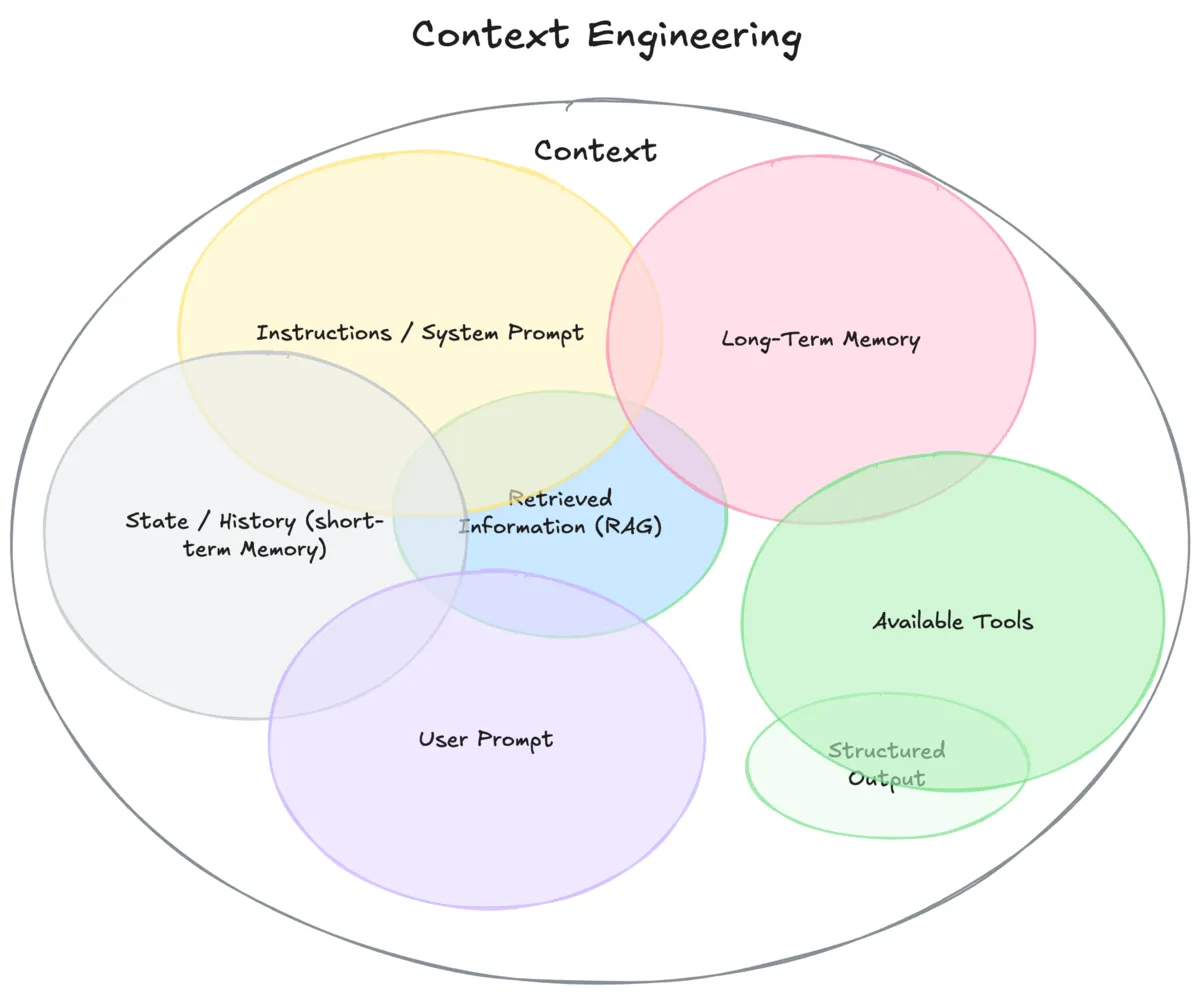

I explained what Context Engineering is and why people who work with LLMs should know about it. In short, every piece of text that we feed to the AI is context, while the engineering part is about using the available features and tools, such as the Model Context Protocol (MCP), to organize and access the proper context so the AI works better with our project.

So I showcased how the audience could use AI tools like Claude Code or Open Code, take advantage of features like custom commands and custom agents, and integrate MCPs into their AI tools.

In one of the use cases, I showed how they could use AI properly, beyond just vibing. To get a more accurate result, I suggested that the audience have proper context in place. Features like commands and agents are powerful and reusable. You just have to write them once, reuse them on every project you have, and refine them along the way.
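To make the "write it once, reuse it" idea concrete, here is a minimal sketch that drops a reusable command file into a project. It assumes Claude Code's convention of storing custom commands as Markdown files under `.claude/commands/`, with `$ARGUMENTS` standing in for whatever you type after the command. The `scaffold_command` helper and the template text are hypothetical, written only for illustration; they are not the command I used in the talk.

```python
from pathlib import Path

# Hypothetical command body; adapt the instructions to your own project.
COMMAND_TEMPLATE = """\
Write a feature specification for the request below.

Request: $ARGUMENTS

Before writing, read the project's conventions and structure,
then list the files that would change and any open questions.
"""


def scaffold_command(project_root: str, name: str, body: str = COMMAND_TEMPLATE) -> Path:
    """Create .claude/commands/<name>.md unless it already exists."""
    command_file = Path(project_root) / ".claude" / "commands" / f"{name}.md"
    command_file.parent.mkdir(parents=True, exist_ok=True)
    if not command_file.exists():  # never overwrite a command you have refined
        command_file.write_text(body, encoding="utf-8")
    return command_file
```

Calling `scaffold_command(".", "write_spec")` in each project gives you the same starting point everywhere, and any refinements you make to the file afterwards are left untouched.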

In the first use case, I showed them how they can add a new feature to an existing application using Claude Code.

- I created a command for specification generation called `/write_spec` and also integrated Serena MCP for document retrieval. Instead of using a vector DB, I prefer local, document-based information that the AI can retrieve from. Basically, Serena MCP remembers the project conventions and structure in its memory, which is actually just a collection of markdown files stored in a `.serena/memories/` directory, and the memory is then accessed using the MCP tool `/read_memories`.

- I ask the AI to generate the new feature specification using the `/write_spec` command with the prompt below.

  > /write_specs I want to add audio version for each blog posts. The audio would be uploaded manually into the project repository, just like what I did on the image uploading. Maybe update the blog frontmatter too and show the audio component if the audio is specified in the frontmatter.

  To give you context, the feature I asked for was an audio player for my personal blog so users can listen to the posts.

- I review the specs, modify them manually, and ask the AI to implement the feature.

- Lastly, I review the code before pushing it to the main branch of the GitHub repository.

In this process, I just have to send two prompts: 1. for generating the specs, and 2. for implementing the feature. The AI already knows my project’s structure, tech stack, conventions, and where the feature should be implemented, without me explicitly telling it.
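The file-based memory idea can be sketched in a few lines. This is not how Serena MCP is implemented; it is only an illustration, assuming a directory of Markdown notes like the one described above. The function names `load_memories` and `build_context` are hypothetical.

```python
from pathlib import Path


def load_memories(memory_dir: str) -> dict[str, str]:
    """Map each memory name (the file stem) to its Markdown text."""
    root = Path(memory_dir)
    if not root.is_dir():
        return {}
    return {
        path.stem: path.read_text(encoding="utf-8")
        for path in sorted(root.glob("*.md"))
    }


def build_context(memories: dict[str, str], request: str) -> str:
    """Put the stored project notes in front of the actual request."""
    sections = [f"## {name}\n{text.strip()}" for name, text in memories.items()]
    return "\n\n".join(sections + [f"## Request\n{request}"])
```

For example, `build_context(load_memories(".serena/memories"), "Add an audio player to blog posts")` returns one string with every note followed by the request. Because the notes are plain files, you can read, edit, and version them like any other document in the repository.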

Contrast this with vibe coding, where you just throw in a prompt and blindly trust what the AI suggests. Context Engineering requires more effort: you manage the context using the existing tools and review what the AI has generated.

It is called engineering because people who work with AI keep figuring out how to refine their workflow and make it better and better.

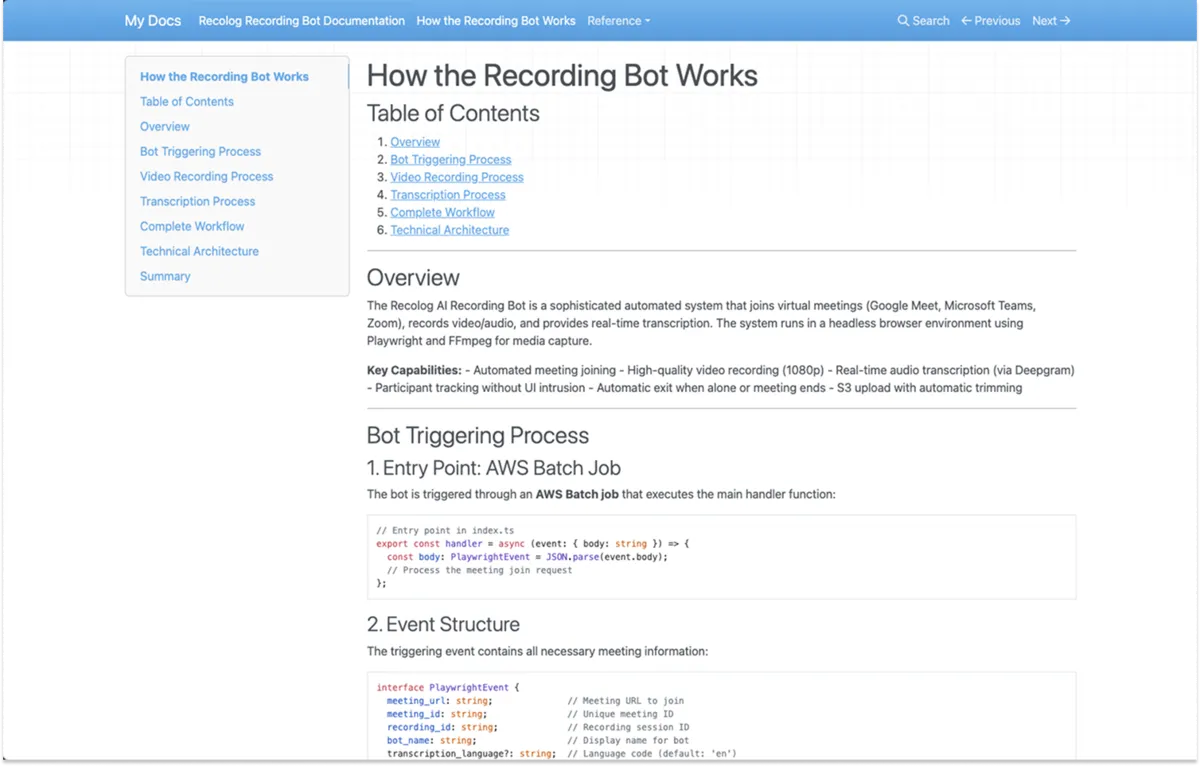

In my other use case, I showed the audience how they can generate comprehensive code documentation and read it using a Python library, mkdocs.

Installing mkdocs is pretty easy. You just have to pip install mkdocs, initialize the project, and run it as shown below.

    pip install mkdocs
    mkdocs new [project]   # replace [project] with the docs directory name you want
    cd [project]           # build and serve must run where mkdocs.yml lives
    mkdocs build           # build the docs and generate the site files
    mkdocs serve           # serve the docs; by default at http://localhost:8000

Here’s a more detailed step-by-step of how I use AI to generate the code documentation:

- I prepare the mkdocs application using the commands above.

- I create a custom agent specializing in code documentation called `code-documenter`. In this step, I use the `opencode agent create` command combined with Context7 MCP to create the code-documenter agent for me. You can also use something else, like ChatGPT or Claude Desktop. Here is the agent description I used when generating the code-documenter agent with Open Code:

  > You are a code documenter. You would help with documenting and explaining the user project. Find variables, classes, and functions for the specific logic or function the user ask for. And then organize it in './docs/docs/' directory. Use context7 tools and learn about how to properly structure or organize docs in mkdocs.

- I ask the AI to document some functions of one of my projects.

  > use code-documenter subagent and help me document the @batch/app/index.ts then write me how actually the bot is being triggered, record the video, and also the create the transcription

With just one-shot prompting, I get comprehensive documentation of my project, and I can read it conveniently in my browser, served by mkdocs at http://localhost:8000
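Since the agent decides which pages to write, it can help to list what actually landed in the docs directory before reading it. The sketch below walks a docs folder and prints lines in the shape of a `nav:` section for `mkdocs.yml`. It is optional: mkdocs builds the navigation automatically when `nav` is omitted. The helper names and the title-from-filename rule are my own assumptions for illustration.

```python
from pathlib import Path


def nav_entries(docs_dir: str) -> list[str]:
    """Return one '  - Title: relative/path.md' line per Markdown page."""
    root = Path(docs_dir)
    entries = []
    for page in sorted(root.rglob("*.md")):
        rel = page.relative_to(root).as_posix()
        # Assumed rule: derive a readable title from the file name.
        title = page.stem.replace("-", " ").replace("_", " ").title()
        entries.append(f"  - {title}: {rel}")
    return entries


def nav_block(docs_dir: str) -> str:
    """Join the entries under a 'nav:' key, ready to paste into mkdocs.yml."""
    return "\n".join(["nav:"] + nav_entries(docs_dir))
```

Running `print(nav_block("docs/docs"))` gives a quick overview of the generated pages, and pasting the output into `mkdocs.yml` lets you pin their order.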

***

I hope the talk gave some insight to those who came, and I hope I can contribute more in the future.

Thank you for reading.